Detecting a squash ball using OpenCV

I love the intersection of sport and stats and have wanted to see something in the squash space for a while. My crazy ambitious goal was to output stats on things like the average height on the front wall per player or the average distance from the wall of shots. I realised in order to do this I'd need to be able to detect a ball in a video. So, in my (not very frequent) spare time I've been working on trying to use computer vision to detect a squash ball in a video. After seeing this post on Player Tracking in Squash, I was inspired to write up where I've got to.

Note: The code is available online at GitLab

Since I know Python, I used it along with the OpenCV library for computer vision. With this I'm able to read in a video and process it frame by frame. A quick disclaimer: I code in Python for a living but have no experience with OpenCV or anything related to computer vision. The code I've written is the result of a lot of trial and error and gradual improvements. I am certainly not claiming this is the best or even a good way to detect a squash ball!

The code tries to separate the "background" of the video (in our case the court) from the moving objects. The moving objects should be the "foreground", which in our case means the players and the ball. When the camera angle is fixed (i.e. the background remains the same throughout the video clip) there are reasonably good algorithms for doing this. I'm using a function called createBackgroundSubtractorKNN which is a "K-nearest neighbours based Background/Foreground Segmentation algorithm". As the video plays it gets more and more information about what is background and what is foreground. My mental model of the algorithm is that if a given pixel is the same as it has always been then it is background. If it is different to normal then it is foreground.

After applying the background detection to a frame of the video we get an image where pixels that are background are black and those in the foreground are white. You can see in the below image we have two white blobs that are reasonably obviously squash players (along with their shadows), and you can just about make out a smudge that is where the ball is.

The above image is reasonably hard to work with, since there is a fair bit of noise. To make the image slightly easier to work with I run a process that tries to remove this noise (small dots around the shadow). In code terms I erode the image and then dilate it. The below image (from a different point in the video), shows a cleaner (more "blocky") version.

In the above image the ball is above the head of the leftmost player. However, you can also see that there is one other potential ball. It is our intuition that means we can rule out the lower "ball", but this is something I haven't been able to codify yet. I haven't done anything with machine learning, but from what I've read it feels like it would be reasonably easy to train a model that would accurately choose the ball. This might be what I look at in the future.

From this image we detect the "contours", which "can be explained simply as a curve joining all the continuous points (along the boundary), having same color or intensity". When things go well, we can filter out the two large contours and be left with just one for the ball. Obviously, things rarely go this well, and I've had various attempts to figure out which of the contours is actually the ball.

The code that generates the video below is very naive and just tries to filter out any contours that are too large to be the ball, or where the radius isn't within a reasonable range. If this naive filtering returns a single contour then I draw that onto the video. If it returns more than one then for now we simply don't draw anything. You can see from the below it does a reasonable job of following the ball, but there is definitely room for improvement.

The "final" result

The following video is just a couple of rallies from a video I shot at the junior tournament at my local club. By filming it myself I can control the camera angle (I only have one camera!). The background detection algorithm keeps a history of previous frames (in my case up to 400) that it uses to separate the foreground and the background. When the camera angle changes, that history becomes wrong, and there is a period of time where almost everything is seen as foreground and nothing is seen as background.

Next steps

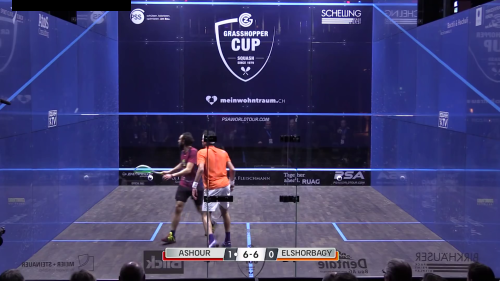

I'd love to be able to run this algorithm on some squashtv videos. I won't be able to do anything about the changing camera angles, but there is room for improvement with the detection of the squash ball. The glass courts used by the pros are amazing but the reflections and the crowd movement through the glass make the ball detection much harder.

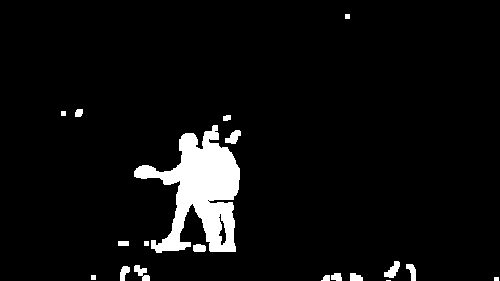

If you take a look at around 25 seconds into this clip (Ashour v ElShorbagy from the Grasshopper Cup) you can see the reflection of the ball on the side wall. That frame gets processed as follows.

The ball and its reflection are the two dots about a third of the way down the left-hand side of the image. You can also see other random bits of noise. My goal is to have something capable of detecting the ball from that noisy image.